Jennifer Story

PROJECT

CAVE

Multi-device collaborative audio/video experiences with AI composition

My Contribution

Conducted UX research, development, and prototyping

As lead designer, worked with the project leader, software engineers, and a design intern

Duration

Jan ~ May 2023 (Phase 1)

Project Introduction

Find the best flexibility and quality for content creation using multiple devices.

Phone cameras have greatly improved over the years, especially in how they handle light, colors, and details. Users can enjoy different features like HDR, portrait, 3D, low-light photography, and zoom. These features help users create high-quality content in different situations and environments.

Another trendy feature is the collaborative use of multiple cameras with smartphones, which gives users more options and perspectives for capturing the world around them. We aim to offer the best flexibility and quality for content creation based on user needs and challenges.

Project Goal

Build an ecosystem for multi-device synchronized video experiences and implement AI-powered on-device editing capability.

Integrate multi-device ecosystem

- Build an ecosystem to increase workflow efficiency

- Cloud & local synchronization

- Ensure compatibility across multiple platforms

Enhance collaboration and flexibility

- Add features for flexible and collaborative interaction

- Leverage AI for editing to reduce users' time and effort

Maximize brand advocacy

- Cater to a broad range of users who will adopt it as part of their everyday devices

- Generate revenue by offering core features for free while creating a premium subscription tier

My Responsibility in UX Research

- Generated survey questions and distributed surveys in a closed environment

- Conducted focus interviews with two video creators

- Defined target users and analyzed market competitors to set the project's UX goal and scope

User Survey and Focus Interview

Conducted a field survey, observation, and focus interviews to understand how content creators interact with devices, along with their real pain points and challenges

Define Users

Brainstormed the behaviors and reasons behind people's use of multiple devices

Reviewed existing research, reports, and data to gather insights into user reviews, pain points, and device-usage preferences

Identify Needs

Personas

Defined users' needs and challenges in terms of user experience, based on their background data, behavior, and emotions, to focus the direction of development

User Journey

Competitor Analysis

Analyzed multi-view streaming competitors for the market landscape

- Phone manufacturers with their native apps

- Third-party software applications

Findings (SWOT) from Users & Behavior

1. Strength: users rely on multiple mobile devices for creative tasks that require productivity, creativity, collaboration, and communication across devices.

2. Weakness: the interface for sharing data among multiple devices in a group setting is not intuitive, and editing and maintaining content consumes a huge amount of time.

3. Opportunity: target creators and influencers with an effective experience for creating consistent content, where users do their part and native AI video editing tools do the rest.

4. Threat: AI video editing apps are proliferating, yet it remains an open question how users' ideas and intentions can be represented more accurately.

My Responsibility in the Design Process

- Set UX strategy and design scope

- Addressed problems and developed solutions, including key features and a UI prototype

UX Strategy

- Build an ecosystem for multi-device experience

- Real-time collaboration UX

- AI-powered on-device video editing capability for productivity

Design Scope

Developing User Experiences

User Flow & Key UX

Discovery Collaborators

Capture Together

AI Composition

Enhance multi-device connectivity

- Simplify steps of proximity connection for collaborators

- Awareness of the device's relative position

- Secure and seamless raw data sharing among collaborators

Add intuitive features for the capture stage

- Real-time streaming feed switching among collaborative devices

- Synchronization of capture settings to another device (e.g., mode, ratio, filter)

Minimize user effort for creation

- Users capture, then AI quickly recomposes the rest for the best storytelling

- Adapt user preferences and context as AI editing criteria (e.g., gallery/photo app data, device contact face information, search data)

- Offer creative freedom with manual user iteration

UI Prototypes

Discovery Collaborators

Integrate 'collaboration mode' in the Camera app for multi-device connection

- Identify participants with trusted contact lists (phone number or email)

- Find nearby participants over a peer-to-peer connection, not a cloud-based one, to enable collaborative video capture

- Create participant groups from frequently connected devices

Awareness of the device's relative position

- Wireless device camera synchronization

- Relative-location directional cues among collaborating devices

Proximity connection

My screen

Capture Together

Switch camera feed in real time between collaborative devices

- Provide an interface to preview other camera feeds while taking video

Bring another device's feed to my screen with audio

Interface for previewing all connected devices at once

My screen

AI Composition

Auto-transfer of raw footage among collaborative devices

- Seamlessly store all footage on each collaborative device at the original quality

- Output on each device is different, based on user preferences and device settings

AI-generated version

- Auto-cut & highlight detection: AI recomposition that finds the best scenes among all footage and creates the best storytelling

- Adapting users' interests for editing: personal resources on the device such as their own photos/videos, contacts, search data, and the context of capture time/location

- AI tools: removal of obstructions in the field of view and background, smart filters and styles, and transitions

Synchronize the capture settings

- Screen orientation, ratio, stylized tone (filter), white balance, etc.
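The settings listed above could be shared between devices as a small structured message. The following is a minimal sketch, not the project's implementation: the `CaptureSettings` fields, their defaults, and the JSON message format are all assumptions made for illustration.

```python
from dataclasses import dataclass, asdict, replace
import json


@dataclass(frozen=True)
class CaptureSettings:
    """Hypothetical capture settings shared between collaborating devices."""
    orientation: str = "landscape"
    aspect_ratio: str = "16:9"
    tone_filter: str = "none"
    white_balance_k: int = 5500  # assumed unit: colour temperature in kelvin


def encode_settings(settings: CaptureSettings) -> str:
    """Serialize the host's settings into a message for peer devices."""
    return json.dumps(asdict(settings), sort_keys=True)


def apply_settings(local: CaptureSettings, message: str) -> CaptureSettings:
    """Return a copy of the local settings updated with the fields it knows
    from the host's message; unknown fields are ignored."""
    incoming = json.loads(message)
    known = {k: v for k, v in incoming.items() if k in asdict(local)}
    return replace(local, **known)


host = CaptureSettings(aspect_ratio="9:16", tone_filter="warm", white_balance_k=4800)
peer = CaptureSettings()
synced = apply_settings(peer, encode_settings(host))
print(synced == host)  # True: the peer now mirrors the host's settings
```

Ignoring unknown fields is one possible design choice: it lets devices with different capabilities stay connected even when one of them reports a setting the other does not support.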

Manual iteration

- Manual editing by cutting clips and grabbing them into the track

AI-generated version

own footage

Raw footage from other collaborative devices

Capture assistance

Proposed extended UX solutions and scenarios

- Auto-reframe the angle/posture by recognizing the object/person and speech context

- Constant enhancement by capturing user behavior and engaging the user's attention

- Possible use cases: live-streamed shopping, online cooking classes, vlogging, etc.

Auto reframe by voice context

Camera B

Camera A

Camera C

Auto-reframe by tagging of the object/person

Dynamic content creation for brand advertising, showcases, and campaigns

- Switch effortlessly between various perspective shots, combining drone, 360 camera, and smartphone close-up or wide-angle shots

- Live collaboration with other influencers or audiences

Cinematography with AI-driven stitching

- Create extended filmmaking and storytelling

- Smooth transitions between different angles by filling in gaps or overlapping sequences

- Synthesize missing portions of the scene to create coherent visual content

- Involves motion tracking, content generation, and style matching

Convert static 2D into next-generation 3D spatial video

- Blurring the lines between photography and videography

- 3D depth for perspective, relative position, and object size, enabling animation and interactivity

- Use cases: 3D product visualization, architectural walk-throughs, and interactive storytelling

Technical Feasibility Study (Validation for AI composition)

AI-generated video output from collaborative footage (POC from the engineering team)

Technical approaches

- How to decide what will be shown from multiple camera feeds at capture time?

- How to interpret what is important from different viewpoints and focus?

- How to automatically create a video composition?

Interviewer & Interviewee

People Dance

Reactions and body movement indicate what is receiving attention

Audio cues indicate who is talking

Technical Solutions

- AI finds and combines the best scenes and audio sources from the footage to create the best storytelling version

- User attention prediction based on the user's on-device metadata (photo gallery, frequent contacts)

- Use the front camera for gaze prediction by monitoring the user's on-screen gaze and facial expressions

- Monitor user actions such as switching views, pausing, device shake, zooming in/out, and location changes during capture

- On-chip semantic segmentation at capture time

- Shot clustering based on shot type, object of interest, and learned user patterns
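One way to picture how such signals could decide which feed is shown is a per-segment score for each device, with the highest-scoring feed selected. The sketch below is illustrative only and is not the POC's method: the scores, the `select_feeds` function, and the `switch_margin` parameter are hypothetical, and a real system would derive the scores from audio cues, gaze prediction, segmentation, and similar inputs.

```python
from typing import Dict, List, Optional

# Hypothetical per-segment scores in [0, 1] for each device.
Scores = Dict[str, List[float]]


def select_feeds(scores: Scores, switch_margin: float = 0.1) -> List[str]:
    """Pick one device per segment, switching only when another device
    beats the current one by more than switch_margin (to limit rapid cuts)."""
    devices = sorted(scores)
    n_segments = len(scores[devices[0]])
    timeline: List[str] = []
    current: Optional[str] = None
    for i in range(n_segments):
        best = max(devices, key=lambda d: scores[d][i])
        if current is None or scores[best][i] > scores[current][i] + switch_margin:
            current = best
        timeline.append(current)
    return timeline


example = {
    "device_1": [0.9, 0.6, 0.2, 0.3],
    "device_2": [0.4, 0.65, 0.8, 0.7],
    "device_3": [0.1, 0.2, 0.3, 0.9],
}
print(select_feeds(example))
# ['device_1', 'device_1', 'device_2', 'device_3']
```

In the second segment device_2 scores slightly higher, but not by more than the margin, so the timeline stays on device_1. That small amount of hysteresis is an assumed design choice to avoid distracting back-and-forth cuts.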

Device 1

Device 2

Device 3

Outputs Prototype

AI-recomposition

AI captures holistic moments with greater creative freedom through a collaborative audio/video experience

AI-recomposition

Success Metrics & Result

- Manual editing by a person takes 20 minutes to produce 52 seconds of video output

- The AI pipeline takes 2 seconds for AI optimization, 40 seconds for clip stitching, and 13 seconds for facial appearance reward calculation, for a total of less than 1 minute
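The comparison can be checked with a few lines of arithmetic. This sketch assumes the three AI stages run one after another, so their times simply add up; the variable names are illustrative.

```python
# Manual editing time reported above, converted to seconds.
manual_edit_s = 20 * 60

# Reported AI stage times in seconds (assumed to run sequentially).
ai_stage_s = {
    "ai_optimization": 2,
    "clip_stitching": 40,
    "facial_appearance_reward": 13,
}

ai_total_s = sum(ai_stage_s.values())
speedup = manual_edit_s / ai_total_s

print(ai_total_s)         # 55 seconds, under 1 minute
print(round(speedup, 1))  # 21.8, i.e. roughly 22x faster than manual editing
```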

Conclusion

CAVE Product Values

Value to the business

- As a multi-device collaboration ecosystem, the camera positions Sam*** in the market for most content creation, photography, and videography, catering to a broad user base.

- Offer core features for free but create a premium subscription model with AI-based features.

- Leveraging underlying Sam*** technologies such as advanced camera hardware, connectivity, and generative AI, together with the unlimited power of people, creates a unique market opportunity.

- Catering to content creators' preferences and solving their pain points can boost brand loyalty, gain competitive advantages, and sell more smartphones.

Value to the Users

-

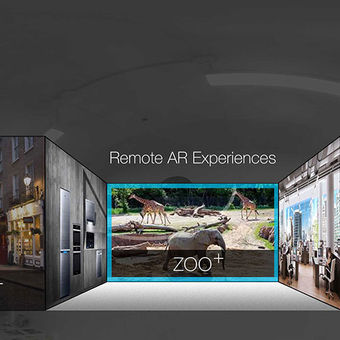

Creating a multi-device collaboration ecosystem enables a more efficient, productive, effective, and smooth working environment, even in a physically separated location.

-

A cross-device ecosystem for collaboration may benefit EdTech platforms, Telehealth, and marketing agencies.

3D video